An article by Aman Majmudar

In July, news that shook the field of Alzheimer’s disease research emerged: Matthew Schrag, a neurologist at Vanderbilt University, tipped off the scientific community that a groundbreaking 2006 study may have relied on falsified data and images. That called into question its findings and much of the research built on them.

Scientific misconduct, however, is more common than one would hope, and the Alzheimer’s research incident was just the latest example to call attention to the troubling practice. In 2015, the journal Science retracted a paper after a graduate student uncovered improper survey methods. That same year, news came out that a scholar fabricated data related to cancer research to aggrandize genomic technology he had developed.

According to the 2022 Dutch National Survey on Research Integrity, more than half of the researchers surveyed had engaged in “questionable research practices,” such as selectively citing references to reinforce their results. In the life and medical sciences, 10.4 percent of respondents said they had fabricated or falsified data in the previous three years, the highest prevalence of any field studied.

The case of the 2006 study in particular reveals the ripple effects of fraud. According to Science magazine, the study was considered a “smoking gun” confirming the theory that the Aβ*56 amyloid protein, formed from smaller, healthy proteins in brain tissue, caused dementia. Its results inspired a new avenue of scientific research and funding, including efforts by pharmaceutical companies to develop Alzheimer’s drugs that break down amyloid protein or prevent its formation.

But Sylvain Lesné, the paper’s primary author, seems to have presented the hypothesis as more promising than it really was. Even as the amyloid-protein theory has gained momentum, studies exploring it have yielded mixed results. Yet almost half of the National Institutes of Health’s Alzheimer’s research funding for this fiscal year, $1.6 billion, went to amyloid-focused research and drug development. Had the field examined his study with a more critical eye, it could have directed money toward more promising research.

Since these findings came to light, other work by Lesné has also come under investigation for scientific misconduct. Why, then, did it take 16 years for scholars to flag this potentially fraudulent yet highly influential publication?

Safeguards to prevent and catch fraud do exist: Articles in prestigious journals undergo peer review, the primary method of quality control, and the U.S. Office of Research Integrity, or ORI, investigates research misconduct. But these safeguards are clearly inadequate.

Journals should reduce the need for whistleblowers by implementing stronger integrity measures early in the publication process. And the scientific community should foster an environment in which researchers can come forward as whistleblowers without delay when necessary.

Ideally, studies with misleading or fraudulent data would be identified before being published. But the current system for checking submitted papers, which relies on peer review, is inadequate. Researchers appear to agree: A 2012 international survey found that most academics surveyed believe that it is very difficult to detect fraud during peer review. And in a recent interview with The Scholarly Kitchen, science historian Melinda Baldwin noted that “peer review is not set up to detect fraud,” and that most cases of fraud are detected after publication.

In fact, peer review, even at top journals, has failed to detect egregious, even obvious, fraud. In May 2020, for example, The Lancet published an article that used misleading data to claim that hydroxychloroquine, an antimalarial drug, increased the risk of death in Covid-19 patients; the journal had to retract it shortly afterward. Earlier that month, the article’s author had published other fraudulent research in The New England Journal of Medicine, which soon retracted that article as well.

These studies’ results had major political implications, and the attention they received allowed the fraud to be uncovered quickly without a whistleblower needing to come forward. That isn’t the case for most studies, however. Scrutinizing and questioning research integrity should become routine, and not just for research that receives worldwide attention.

Because peer review falls short, it is important to have ways to detect fraud quickly after publication. This is where whistleblowers come in, and they need to feel safe and emboldened to come forward when necessary. Yet a 2016 article in the “Handbook of Academic Integrity” highlighted a series of case studies showing that whistleblowers can face challenges and retaliation for speaking out. Debunking other scientists’ work can bring unpopularity and notoriety, as it did for Schrag.

This also suggests why the ORI is insufficient: Misconduct allegations often come from within the accused scientist’s institution, where whistleblowers likely face social pressure not to report their colleagues for fear of pushback and isolation.

Moreover, uncovering fraud requires significant time and expertise. Researchers may flag suspicious research to funding agencies or the Office of Research Integrity, but they often need to provide evidence that the accused scientist acted “intentionally, knowingly, or recklessly.”

Schrag, for example, scrutinized the 2006 study and reported it to the NIH, but only after an attorney investigating the experimental drug simufilam paid him $18,000 to do so. (The attorney represented clients who would benefit if simufilam failed.) Without the payment, would Schrag have found the investigation worthwhile? Probably not. And experts from Science, a top academic journal, took six months to conduct their own independent investigation into Schrag’s claims.

If scrutinizing initially well-regarded, foundational research were normal practice in the scientific community, with dedicated time and funding, it likely wouldn’t have taken 16 years and a legal investigation propelled by competitors’ interests to uncover the suspected fraud.

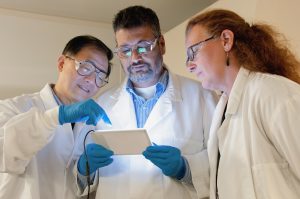

Illustration (Photo by National Cancer Institute / Unsplash)

Foundational research should also be reassessed periodically because technology enables more advanced measurements and more comprehensive research methods, which can render older studies, whose findings were once considered robust, outdated if not obsolete. These reassessments would differ from identifying gaps in research, which is already standard practice. Finding gaps can refine knowledge and build upon a foundational premise; a reassessment, like well-grounded whistleblowing, questions the premise of the research avenue itself.

To make such reassessments a convention, journals should encourage more articles that specifically evaluate old yet highly cited research conclusions using the latest measurements and methodologies. If scientific data come to be seen as more dynamic in this way, it will become easier to question well-established foundations, which would also help normalize whistleblowing.

Leaders, such as department heads, have an important role to play as well. They should make clear that whistleblowing is encouraged and that they will support and protect whistleblowers however necessary.

Whistleblowing should not require courage, because it should be the norm: The very job of scientists is to advance their fields by questioning existing knowledge. Yet the goal should be not to undermine science, but to demand more of what science already demands of itself: consistent and comprehensive improvement.

Aman Majmudar studies law, letters, and society at the University of Chicago.

This article was originally published on Undark.