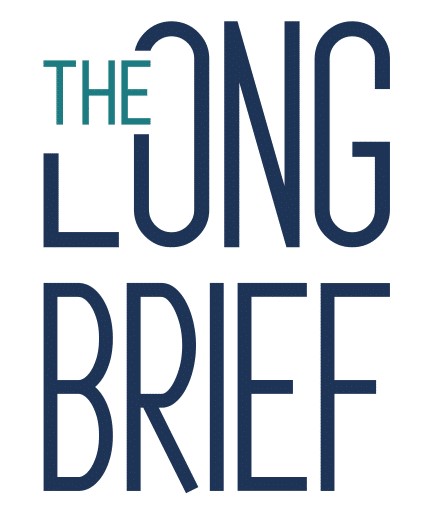

In March 2021, a Twitter user asked Martin Kulldorff if everyone needed to be vaccinated against Covid-19. Kulldorff, then a professor at Harvard Medical School, had spent 20 years researching infectious diseases and contributing to the development of the country’s vaccine safety surveillance system.

“No,” he responded. The vaccines were important for some high-risk people, he wrote, but “those with prior natural infection do not need it. Nor children.”

That advice put Kulldorff outside the mainstream in his field, and he soon faced consequences from Twitter (now known as X). The social network labeled the tweet as misleading and inserted a link offering users an opportunity “to learn why health officials recommend a vaccine for most people.” Twitter also limited the post’s ability to be retweeted and liked, Kulldorff said in a recent interview.

The experience spooked him. His account had already been suspended once, he said, and he didn’t want to risk a permanent ban. “I had to self-censor,” said Kulldorff. “I didn’t say everything I would have liked to say.”

He was far from alone. Over the course of the pandemic, major social media companies removed thousands of accounts and millions of posts that contained statements about Covid-19 flagged as false or misleading. These measures were part of an unprecedented pandemic-era effort to limit the spread of health misinformation at a time when the internet was awash with dubious claims of miracle cures, illness-inducing 5G networks, and vaccines that harbor microchips.

The Battle Against Misinformation: Symptom or Cure?

The question that some public health experts are now asking is whether those efforts ultimately made users healthier and safer. Some studies do suggest that exposure to health misinformation can have real-life consequences, including making some people less likely to want to get a Covid-19 vaccine. And several public health experts told Undark that it is perfectly reasonable for tech companies to remove or deemphasize patently false health claims.


But there’s scant evidence those policies actually boosted vaccination rates or prevented harmful behaviors. Indeed, early studies analyzing vaccine misinformation policies specifically have found that such policies did little to reduce users’ overall engagement with anti-vaccine content. In addition, some public health researchers are concerned that posts from people like Kulldorff — an expert with informed-but-heterodox views — were caught up in a dragnet that stifled legitimate debate at a time when the science was still unsettled.

A Lawsuit Emerges

These and other incidents of content moderation are now part of a sprawling First Amendment lawsuit alleging that numerous federal agencies coerced social media companies into removing disfavored content from their platforms. Kulldorff is one of several plaintiffs.

Whether the government crossed the line from legal persuasion to illegal coercion will be a question for the courts to decide. Whatever the outcome of that case, however, the public health and misinformation research communities will be left with foundational questions of their own.

Chief among these is whether, in the midst of a pandemic emergency, efforts to control misinformation overstepped the evidence.

And if so, did these overzealous efforts have any negative effects, such as muting useful scientific disagreement, eroding public trust, or stymieing free speech?

Researchers also say that more evidence is needed to determine whether attempts to curb online health misinformation have the desired effect on public behavior, assuming a consensus definition for “misinformation” can even be found. To date, they say, there is little agreement on the best way to study these questions, and what evidence has been compiled so far paints a rather mixed picture.

“It’s still a very young field,” said Claire Wardle, a misinformation expert at the Brown University School of Public Health. In hindsight, “not everybody got everything right,” she said. “But you can’t deny that many, many people spent many, many hours desperately trying to figure out what the right thing to do was.”

Public Health: A Sector Prone to Misinformation?

Debate over just how to confront misinformation in the media reaches back decades, but for most of the internet era, online content moderation had little to do with public health. When social media companies took down posts, they mostly focused on removing excessively violent or disturbing material. That began to change after the U.S. election in 2016, when an organization tied to the Russian government used social media to impersonate U.S. citizens. Leaders from Facebook, Google, and Twitter were called before a congressional committee and grilled on their companies’ role in enabling foreign election interference. Major social media platforms went on to adopt more aggressive policies for moderating election-related content.

In 2019, public health officials also raised concerns about information circulating online. After a series of measles outbreaks, the Centers for Disease Control and Prevention blamed misinformation for low vaccination rates in vulnerable communities. Technology companies began to take action: Facebook reduced the visibility of anti-vaccine pages, among other changes. Twitter’s search engine began directing vaccine queries to public health organizations.

On its face, it can seem evident that exposure to a deluge of social media posts insisting, for example, that vaccines contain surreptitious microchips might well cause some people to hesitate before getting a shot. Researchers who spoke with Undark pointed to a pair of randomized controlled trials, or RCTs, that hint at the potential for misinformation to influence real-world behavior.

In one study published in 2010, German researchers randomly assigned individuals to visit a vaccine-critical website, a control website, or both. Spending just 5 to 10 minutes on a vaccine-critical website decreased study participants’ short-term intentions to vaccinate.

Laboratory Examination before Ocular Surgery during COVID-19 Pandemic in Bangladesh in 2020 (Photo: IAPB/Vision 2020 / Flickr.com)

More recently, in 2020, a London-based team of researchers randomly assigned more than 8,000 study participants to view either false information or factual content about Covid-19 vaccines.

The group that viewed the misinformation experienced about a 6-percentage-point drop in the number of participants saying they would “definitely” accept a vaccine.

Not all studies show such a clear connection, though. In June and July of 2021, a team of Irish researchers found that exposure to anti-vaccine content had little effect on study participants’ intent to get a Covid-19 shot. Perhaps counterintuitively, the study groups exposed to misinformation showed a slight uptick in their intention to vaccinate.

The authors concluded that misinformation probably does affect behavior, but its influence “is not as straightforward as is often assumed.”

RCTs are important, said Wardle. Their study design allows researchers to isolate a single variable, such as exposure to anti-vaccine content, and then analyze its effect. But, she added, these experiments only paint a partial picture.

For example, none of the three vaccine studies asked participants to share their vaccination records. Without these data, it’s unclear whether changes in survey responses accurately predicted changes in vaccination rates. Additionally, RCTs may not fully capture the myriad ways in which online misinformation spreads, said Wardle. A person might dismiss a piece of misinformation online. But if they encounter it again — say at a family gathering — they may be primed to accept it as truth.

“I think it’s important that we have RCTs, but it’s also important that we have a whole host of other methodologies” to understand the relationship between misinformation and human behavior, said Wardle.

Misinformation – No One Remedy

Even if false statements do cause real-world harms, it’s not clear how best to respond. Wardle and her co-authors recently reviewed 50 studies that looked at possible ways to mitigate Covid-19 misinformation. Many studies looked at a strategy called accuracy prompts, which ask users to consider a story’s accuracy before deciding whether or not to share it. Others examined the effects of inoculation: short lessons, often presented in video format, that teach people the tactics of misleading information before they encounter it in the real world.

Wardle’s team found that such approaches only have a modest effect, though, and it’s unclear how long the effect lasts. “There’s different things that we should be doing,” she said, “but there isn’t the silver bullet.”

When the Covid-19 pandemic began, public health authorities had few evidence-backed strategies for responding to false information about the pandemic — let alone understanding its potential impact.

But, as the pandemic wore on, more and more policymakers and advocates began calling for stronger action against misinformation online. Often, they advocated for sweeping strategies that, some researchers now say, may have had limited effect.

It Took a Year for the United States’ Immune System to Kick In

Those concerns over misinformation reached a high point in the summer of 2021, in the midst of the Covid-19 vaccine rollout. That July, U.S. Surgeon General Vivek Murthy issued an advisory on health misinformation, describing it as “a serious threat to public health” that needed to be addressed with “a whole-of-society effort.” Covid-19 cases were rising, and roughly seven months into the vaccine rollout, about one-third of U.S. adults remained unvaccinated. Polling indicated that many among them held false beliefs, such as the notion that the vaccines had been shown to cause Covid-19, or even infertility.

Alongside Murthy, other White House officials began raising the alarm about 12 accounts that, they said, were driving most of the false information online. “Anyone listening to it is getting hurt by it,” President Joe Biden told reporters. “It’s killing people.”

Former Vice President of the United States Joe Biden speaking with attendees at the Moving America Forward Forum hosted by United for Infrastructure at the Student Union at the University of Nevada, Las Vegas in Las Vegas, Nevada, on February 16th 2020. (Photo: Gage Skidmore / Wikimedia Commons)

Biden appeared to be referring to a report called The Disinformation Dozen, produced by the nonprofit Center for Countering Digital Hate. In a summary of the report, CCDH stated that decreasing or eliminating the visibility of the worst offenders “is the most effective way of stopping the proliferation of dangerous misinformation.” The report was widely cited by news organizations, including CNN, CBS News, The Washington Post, and The Guardian.

Facebook, now owned by Meta, eventually took action against the 12 accounts. But the company also pushed back against the report, saying that “focusing on such a small group of people distracts from the complex challenges we all face in addressing misinformation about Covid-19 vaccines.”

At least one analysis suggests that Facebook may have been right. A September study published in Science Advances found that from November 2020 to February 2022, Facebook’s vaccine misinformation policies “did not induce a sustained reduction in engagement with anti-vaccine content.” (Some of the same authors studied Twitter’s vaccine misinformation policy, too. That paper, which has not yet been peer reviewed, found Twitter’s policy may have been similarly ineffective.)

Facebook really did try, said the lead author, David Broniatowski, associate director for the Institute for Data, Democracy, & Politics at George Washington University. The company “clearly targeted and removed a lot of anti-vaccine content,” he said. But people simply shifted their attention to what remained.

“Just shutting down pages, deplatforming specific groups, is not particularly effective as long as there’s a whole ecosystem out there supporting that kind of content,” said Broniatowski.

Part of the problem, Broniatowski said, was that Facebook’s desire to remove anti-vaccine content was at odds with the platform’s fundamental design, which aims to help users build community and find information. The end result, he said, is a little bit like playing Whac-a-Mole with anti-vaccine content, only in this case, “the same company that is trying to whack the moles is also building the tunnels that the moles are crawling through.”

The Virus of Misinformation Is Subdued but Finds New Avenues to Spread

If a major social media company does ban an individual or a group, there can be unintended consequences, said Ed Pertwee, a research fellow at the Vaccine Confidence Project at the London School of Hygiene & Tropical Medicine. For one, research suggests that users whose accounts are removed often migrate to less regulated social media platforms like Gab or Rumble.

That transition to an alternative platform will usually limit a user’s reach, said Pertwee. But on a less-regulated site, the user may come in contact with people who share their ideology or hold even more extreme views.

Pertwee’s own research has additionally found that deplatforming can give rise to victimhood narratives that prompt people to coalesce around a shared fear of persecution at the hands of big technology companies.

Pertwee also pointed out that while the information environment is an important influence on people’s attitudes toward vaccination, it isn’t the only influence — and it may not be the most important one.

A number of studies have linked vaccine hesitancy to distrust of politicians and medical experts, said Pertwee.

For this reason, he and his colleagues suggest that vaccine hesitancy should be viewed “primarily as a trust issue rather than an informational problem.” That’s not to say that misinformation doesn’t matter, they wrote in a 2022 Nature Medicine perspective, but public health officials should concentrate first and foremost on building trust.

It’s unclear why the Center for Countering Digital Hate put so much emphasis on banning users as a solution to vaccine hesitancy, or why the Biden administration chose to amplify the Disinformation Dozen report. CCDH initially agreed to do an interview with Undark in late September, but then canceled repeatedly and ultimately declined to comment.

Colm Coakley, a spokesperson for CCDH, did not respond to a brief list of questions from Undark asking about the evidence behind the group’s recommendations.

Still No Consensus Definition Separating Opinions From Straight-Up Fake News

As social media companies and public health officials worked to moderate content during the pandemic, they ran into another question: What actually qualified as misinformation? In interviews with Undark, several researchers drew a distinction between posts that are demonstrably false or unsubstantiated — such as the rumor that the Covid-19 vaccines contain microchips — and posts that merely offer an opinion that falls outside of the scientific mainstream.

Some researchers say removing or suppressing the latter may have stifled debate at a time when Covid-19 science was new and unsettled. Further, policies that limited the spread of both types of posts created perceptions that prominent critics of public health authorities — including Kulldorff — were being silenced for their opposition to vaccine mandates and other contentious policies.

Kulldorff is perhaps best known as a co-author of the Great Barrington Declaration, a document that encouraged governments to lift lockdown restrictions on healthy young people while providing better protection for those at highest risk. Soon after it was published, the declaration became a lightning rod, with some public health officials characterizing it as a call to allow the virus to simply run its course through the population, regardless of the toll, as a way of quickly establishing herd immunity.

The declaration’s website appeared to be downranked in Google’s search results shortly after its publication, Kulldorff said in sworn testimony, and the declaration’s Facebook page was briefly taken down.

Later, in April 2021, YouTube removed a video of a policy roundtable, hosted by Florida Gov. Ron DeSantis, in which Kulldorff and other scientists stated that children did not need to mask at school during the pandemic.

Persuasion or Censorship?

None of this content should have been removed, said Jeffrey Klausner, a public health expert at the University of Southern California. Klausner spent more than a decade as a public health official in San Francisco in the 1990s and 2000s. At the time, he and his colleagues hosted community meetings where controversial views, including AIDS denialism, were aired. “We allowed those conversations to occur and were always interested in supporting open discussion,” he said.

Public health officials should still be relying on persuasion, not censorship, said Klausner, even in the age of the internet, when posts can quickly go viral. “To me the answer to free speech is not less speech,” said Klausner. “It’s more speech.”

Indeed, some critics see the impulse to shut down accounts as part of a broader desire among public health leaders to avoid debate over contentious topics, from mask mandates to the origins of the SARS-CoV-2 virus. Sandro Galea, dean of the Boston University School of Public Health, has written extensively about the value of free speech and debate within the public health community. In an interview with Undark, he said that it’s reasonable for social media companies to moderate patently false speech that could cause harm, such as the notion that vaccines contain microchips, or the denial of historical atrocities.

It’s the heterodox thinking that Galea worries is getting lost as public health goes through what he characterizes as an illiberal moment. In his new book, “Within Reason: A Liberal Public Health for an Illiberal Time,” Galea argues that during the pandemic, public health lost sight of certain core principles, including free speech and open debate.

“Insofar as Kulldorff was trying to generate a discussion, I think it is a pity not to allow discussion,” said Galea. Any short-term gains from removing or de-boosting such posts might be outweighed, he said, by the longer-term consequences of public health being seen as unwilling to allow debate and unwilling to trust people to understand the complexities inherent in navigating a new pathogen.

These issues have now spilled over into the legal case involving Kulldorff and others.

By law, social media companies are free to decide what kind of content their platforms will host. The lawsuit, recently renamed Murthy v. Missouri, hinges on whether the federal government censored protected speech by coercing social media companies to moderate certain content, particularly posts related to elections and the Covid-19 vaccines.

“It’s a hugely important case,” said Lyrissa Lidsky, a professor of constitutional law at the University of Florida Levin College of Law. “Increasingly, the government has discovered that it can go to social media companies and exert pressure and get things taken down,” she said. Liberals may be inclined to support the Biden administration’s interventions, she noted, but they may feel differently when someone else is in charge. “If Trump wins the next presidential election, and his people are in office,” she said, “do you feel comfortable with them deciding what misinformation is?”

In December, the American Academy of Pediatrics, the American Medical Association, and three other physician groups weighed in with a legal brief. The brief argues that the government has a “compelling interest” in fighting vaccine misinformation because “studies have shown that misinformation on this topic can decrease vaccine uptake, which in turn diminishes vaccines’ ability to control the spread of disease and reduces the number of lives saved.”

Overprotective and Overzealous Liberals vs. Republicans for Free Speech

All of this is unfolding within a broader political context in which Republicans are increasingly going on the offensive against misinformation researchers for their role in what congressional Republicans characterize as Biden’s “censorship regime.” January saw the formation of the House Judiciary Select Subcommittee on the Weaponization of the Federal Government, led by Ohio Republican Rep. Jim Jordan. Among other things, the committee aims to investigate alleged cooperation between the government and social media companies to suppress right-wing voices. The committee has requested a range of documents from researchers who study misinformation.

The congressional investigation and lawsuit have had a chilling effect on misinformation research, according to some experts.

People have “become somewhat cautious and careful,” said K. Viswanath, a health communication professor at Harvard. In his view, allegations of censorship have been overstated. If a scientist wants to present an alternative opinion, he said, there are plenty of forums available, including scientific journals and blogs run by scientific organizations. Airing those dissenting scientific views on social media, Viswanath continued, can contribute to public confusion and even cause harm — an outcome that content moderators may reasonably wish to avoid.

Recently, Wardle has been reflecting on how the field of misinformation research responded to Covid-19. In her view, the impulse to manage online speech went too far. Like Galea, she thinks some content moderation is important, but worries that, by not limiting those efforts to obviously false speech, the field became vulnerable to legal challenges from the states, which have had a chilling effect on future research. “I think the failure of really communicating effectively about the complexities of an issue is what led us to this fight around censorship,” she said. Particularly in the case of the Covid-19 pandemic, where so much of the science was truly unsettled, the platforms should have let people say what they wanted, she said. “But let’s be smarter at saying why we think the way that we do,” she added.

“Rather than saying these people are crazy, get them and say, ‘Look, we both went to medical school. I’m reading this journal and saying this, you’re reading the same journal and saying that: why?’”

This article was originally published on Undark.